Hierarchical Clustering Using A*

Exact and Approximate Hierarchical Clustering Using A*

More details here

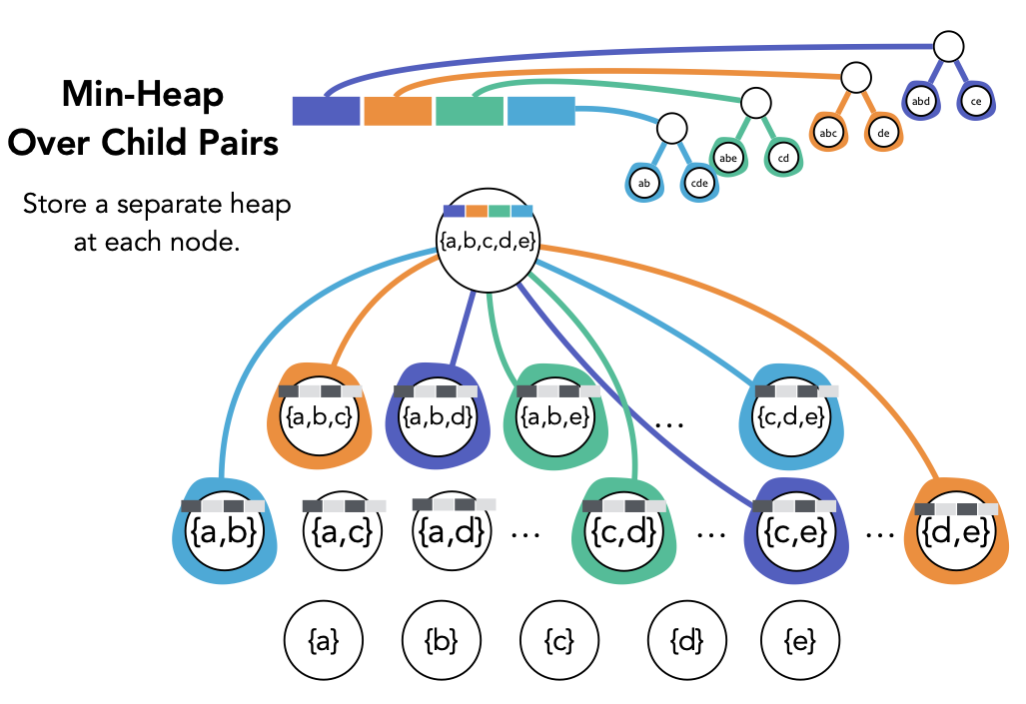

Hierarchical clustering is a critical task in numerous domains. Many approaches are based on heuristics and the properties of the resulting clusterings are studied post hoc. However, in several applications, there is a natural cost function that can be used to characterize the quality of the clustering. In those cases, hierarchical clustering can be seen as a combinatorial optimization problem. To that end, we introduce a new approach based on A* search. We overcome the prohibitively large search space by combining A* with a novel trellis data structure. This combination results in an exact algorithm that scales beyond previous state of the art, from a search space with 1012 trees to 1015 trees, and an approximate algorithm that improves over baselines, even in enormous search spaces that contain more than 101000 trees. We empirically demonstrate that our method achieves substantially higher quality results than baselines for a particle physics use case and other clustering benchmarks. We describe how our method provides significantly improved theoretical bounds on the time and space complexity of A* for clustering. The A* package provides all the data structures, algorithms, and experiments for MAP and Z computation for hierarchical clusteringis using A*.

Publications:

Exact and Approximate Hierarchical Clustering Using A*

Craig S Greenberg, Sebastian Macaluso, Nicholas Monath, Avinava Dubey, Patrick Flaherty, Manzil Zaheer, Amr Ahmed, Kyle Cranmer, Andrew McCallum

37th Conference on Uncertainty in Artificial Intelligence (UAI 2021) [ conference paper ] [ arXiv ] [ code ]